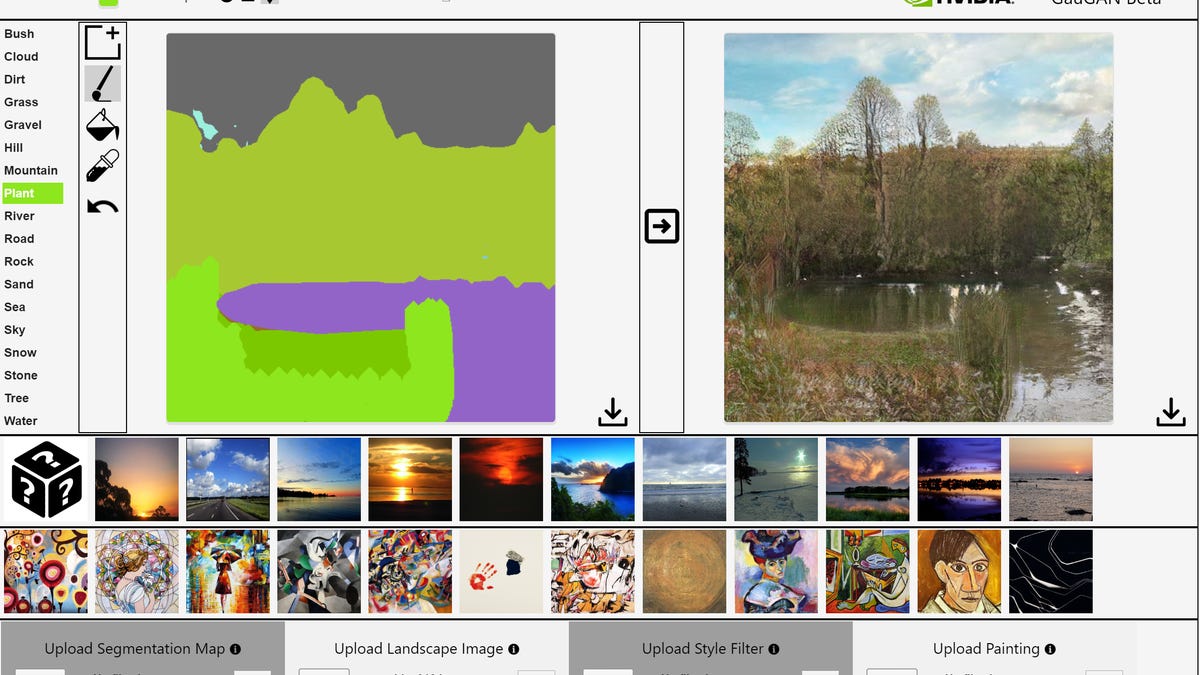

Deepfake nature with Nvidia GauGAN

At Siggraph 2019, Nvidia showed off its AI-based technology for turning scribbles into realistic scenes.

Though GauGAN's output has some artifacts, like weird smearing where it doesn't seem to be sure of the layering, it can perform advanced operations, like adding reflections, that give the scenes a more realistic feel.

Nvidia's GauGAN -- one of the company's technology showcases at the graphics-research-focused Siggraph show this week -- is one of the coolest uses for AI in graphics that I've seen in a while. Essentially, it lets you paint with smart fills and brushes that are based on real-world images.

GauGAN starts with an image that it deconstructs into a segmentation map -- a breakdown of the image by object types such as sky, grass, mountains and clouds -- or you can start from scratch and scribble in the various regions yourself. Then it uses its GAN-based content to fill in each area with the appropriate type of artificially generated imagery. You can also apply generated styles based on real paintings.
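To make the idea of a segmentation map concrete, here is a toy sketch in Python. It is not Nvidia's code and it doesn't use a GAN: the label IDs, the `FILL` table and the `render` function are all made up for illustration, and a flat color per class stands in for the imagery a trained generator would produce.

```python
# Toy illustration only: GauGAN uses a trained generator network,
# not a lookup table. All names and values here are hypothetical.
SKY, MOUNTAIN, GRASS = 0, 1, 2  # made-up label IDs for three object types

# One flat RGB color per class, standing in for generated texture.
FILL = {
    SKY: (135, 206, 235),
    MOUNTAIN: (110, 110, 110),
    GRASS: (60, 160, 60),
}


def render(segmentation_map):
    """Replace every label in the map with the fill color for its class."""
    return [[FILL[label] for label in row] for row in segmentation_map]


# A 4x6 "scribble": sky on top, a mountain in the middle, grass along the bottom.
seg = [
    [SKY, SKY, SKY, SKY, SKY, SKY],
    [SKY, SKY, MOUNTAIN, MOUNTAIN, SKY, SKY],
    [SKY, MOUNTAIN, MOUNTAIN, MOUNTAIN, MOUNTAIN, SKY],
    [GRASS, GRASS, GRASS, GRASS, GRASS, GRASS],
]

image = render(seg)  # same 4x6 shape, but every cell is now an RGB tuple
```

The point is the data flow: the scribble only says what goes where, and a separate step decides what each region should look like.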

It has the potential to be a huge timesaver for all sorts of designers.

GauGAN, which debuted in March and has been publicly available on Nvidia's web site for about a month, is just the latest in a series of Nvidia projects that showcase the company's AI research into generative adversarial networks (GANs). Others include its StyleGAN-based deepfake generator and its older face-aware fill-in.

Other tech the company is showing off at Siggraph includes an AR headset that incorporates foveated rendering, a technique that saves processing power by prioritizing rendering quality for the parts of a scene you're looking at. Foveated rendering is usually used for VR, which is less processing-intensive than AR because VR doesn't have to worry about overlaying its imagery on the real world.
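As a rough sketch of how foveated rendering decides where to spend effort, the Python below assigns each pixel a quality level based on its distance from the point you're looking at. It is an assumption-laden illustration, not how Nvidia's headset works: the `quality_level` function, the radii and the 0.25 quality floor are all invented for the example.

```python
import math


def quality_level(pixel, gaze, inner_radius=100.0, outer_radius=300.0):
    """Return a hypothetical render quality between 0.25 and 1.0.

    Pixels within inner_radius of the gaze point get full quality (1.0),
    pixels beyond outer_radius get the floor (0.25), and quality falls
    off linearly in between. All thresholds are made-up values.
    """
    distance = math.dist(pixel, gaze)  # Euclidean distance in pixels
    if distance <= inner_radius:
        return 1.0
    if distance >= outer_radius:
        return 0.25
    t = (distance - inner_radius) / (outer_radius - inner_radius)
    return 1.0 - t * 0.75


gaze = (500, 500)
center = quality_level((500, 500), gaze)   # right where you're looking
midway = quality_level((700, 500), gaze)   # 200 pixels away from the gaze point
edge = quality_level((0, 0), gaze)         # far periphery
```

A real renderer would use a value like this to pick a shading rate or resolution for each screen region, which is where the processing savings come from.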

And because the Apollo 11 anniversary is such a hot topic that people are creating butter sculptures of the crew, Nvidia's highlighting its Omniverse platform to put attendees virtually on the moon using its AI pose estimation, a combination of motion capture, AI and its RTX ray-tracing technology.